K-means clustering

Suppose we have a data set $\{x_1, \dots, x_N\}$ consisting of $N$ observations of a random variable $x$ in a $D$-dimensional space. The goal is to partition the data set into some number $K$ of clusters. Formally, let $\{\mu_1, \dots, \mu_K\}$ be a set of $D$-dimensional vectors in which $\mu_k$ is associated with the $k$-th cluster (the $\mu_k$ can be thought of as the centres of the clusters). The goal is to find an assignment of data points to clusters, together with the vectors $\mu_k$, so that the sum of the squared distances of each data point to its closest vector $\mu_k$ is a minimum.

Let $r_{nk} \in \{0, 1\}$, where $k = 1, \dots, K$, describe the assignment of each data point $x_n$ to a cluster ($r_{nk} = 1$ if $x_n$ is assigned to cluster $k$ and $0$ if not). We define a function called the distortion measure, given by

$$J = \sum_{n=1}^{N} \sum_{k=1}^{K} r_{nk} \, \lVert x_n - \mu_k \rVert^2$$

which represents the sum of the squares of the distances of each data point to its assigned vector $\mu_k$. Our goal is to find values for the $r_{nk}$ and the $\mu_k$ so as to minimize $J$. The algorithm is as follows:

Algorithm:

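- pick initial values for the $\mu_k$

- minimize $J$ with respect to the $r_{nk}$, keeping the $\mu_k$ fixed (Expectation)

- minimize $J$ with respect to the $\mu_k$, keeping the $r_{nk}$ fixed (Maximization)

- repeat the last two steps until the assignments no longer change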
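The alternating steps above can be sketched in Python with NumPy. This is only an illustrative sketch, not a reference implementation: the function name `kmeans`, the choice of initial centres (random data points), the iteration cap, the handling of empty clusters and the toy data are all assumptions that do not come from these notes.

```python
import numpy as np

def kmeans(X, K, n_iters=100, seed=0):
    """Alternate the two minimisation steps of J until the assignments stop changing."""
    rng = np.random.default_rng(seed)
    # Assumed initialisation: K distinct data points picked at random.
    mu = X[rng.choice(len(X), size=K, replace=False)].astype(float)
    labels = None
    for _ in range(n_iters):
        # Squared distance of every point to every centre, shape (N, K).
        d2 = ((X[:, None, :] - mu[None, :, :]) ** 2).sum(axis=2)
        # Centres fixed: J is smallest when each point goes to its nearest centre.
        new_labels = d2.argmin(axis=1)
        if labels is not None and np.array_equal(new_labels, labels):
            break  # assignments unchanged, so the centres would not move either
        labels = new_labels
        # Assignments fixed: J is smallest when each centre is the mean of its cluster.
        for k in range(K):
            members = X[labels == k]
            if len(members) > 0:  # assumed policy: keep the old centre if a cluster is empty
                mu[k] = members.mean(axis=0)
    # Distortion measure: squared distance of each point to its assigned centre.
    J = ((X - mu[labels]) ** 2).sum()
    return mu, labels, J

# Toy data (made up for illustration): two well-separated blobs in 2 dimensions.
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(0.0, 0.5, size=(50, 2)),
               rng.normal(5.0, 0.5, size=(50, 2))])
mu, labels, J = kmeans(X, K=2)
print(mu)
print(J)
```

Here `labels[n]` stores the index $k$ for which $r_{nk} = 1$, which is a more compact way of holding the same assignment. The result depends on the initial centres, so in practice the procedure is often run from several different starting points.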

Multivariate Gaussian distribution

For a random variable $X$ with a finite number of outcomes $x_1, \dots, x_k$ occurring with probabilities $p_1, \dots, p_k$, the expectation of $X$ is defined as:

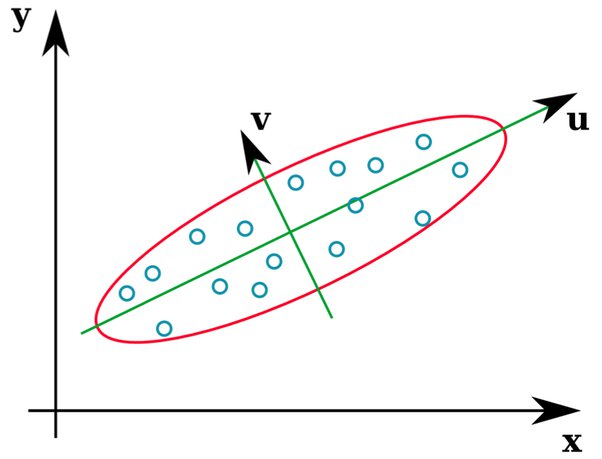
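As a small worked example of this definition (the probability-weighted sum of the outcomes), the snippet below computes the expectation of a fair six-sided die. The helper name `expectation` and the die example are illustrative assumptions; exact fractions are used only to avoid rounding.

```python
from fractions import Fraction

def expectation(outcomes, probs):
    """Probability-weighted sum of a finite list of outcomes."""
    if sum(probs) != 1:
        raise ValueError("probabilities must sum to 1")
    return sum(x * p for x, p in zip(outcomes, probs))

# Fair six-sided die: every face has probability 1/6.
faces = [1, 2, 3, 4, 5, 6]
probs = [Fraction(1, 6)] * 6
die_mean = expectation(faces, probs)
print(die_mean)  # 7/2
```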

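The covariance between two variables $X$ and $Y$ is defined as the expected value (or mean) of the product of their deviations from their individual expected values:

$$\operatorname{cov}(X, Y) = E\big[(X - E[X])(Y - E[Y])\big]$$

When working with multiple variables $X_1, \dots, X_D$, the covariance matrix, denoted $\Sigma$, is the $D \times D$ matrix whose $(i, j)$-th entry is $\Sigma_{ij} = \operatorname{cov}(X_i, X_j)$.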
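To make the covariance matrix concrete, here is a minimal NumPy sketch that estimates it from data. It assumes the expectations are replaced by averages over the observed samples (dividing by the number of samples rather than by one less); the function name `covariance_matrix` and the sample values are made up for illustration.

```python
import numpy as np

def covariance_matrix(samples):
    """Estimate the covariance matrix from samples of shape (N, D), one row per observation."""
    centered = samples - samples.mean(axis=0)    # deviations from the per-variable mean
    return centered.T @ centered / len(samples)  # average product of deviations

# Four observations of two variables (illustrative values).
samples = np.array([[1.0, 2.0],
                    [2.0, 4.0],
                    [3.0, 6.0],
                    [4.0, 9.0]])
Sigma = covariance_matrix(samples)
print(Sigma)
```

The diagonal entries are the variances of the individual variables, and the matrix is symmetric because the covariance of two variables does not depend on their order.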

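The density function of a univariate Gaussian distribution with mean $\mu$ and variance $\sigma^2$ is given by:

$$p(x; \mu, \sigma^2) = \frac{1}{\sqrt{2\pi}\,\sigma} \exp\left(-\frac{1}{2\sigma^2}(x - \mu)^2\right)$$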

- $(x - \mu)^2$ is always non-negative (it is zero only at $x = \mu$)

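- the value $-\frac{1}{2\sigma^2}(x - \mu)^2$ is never positive; as a function of $x$ it is a parabola pointing downward

- the $\exp(\cdot)$ part makes sure that the quantity is always > 0

- the normalization factor $\frac{1}{\sqrt{2\pi}\,\sigma}$ multiplies the exponential so that the density integrates to 1: $\frac{1}{\sqrt{2\pi}\,\sigma} \int_{-\infty}^{\infty} \exp\left(-\frac{1}{2\sigma^2}(x - \mu)^2\right) dx = 1$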
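The role of each part of the density can be checked numerically. The sketch below uses only the Python standard library; the function names `gaussian_pdf` and `integrate`, the midpoint rule, the integration range of ten standard deviations and the parameter values are all illustrative assumptions.

```python
import math

def gaussian_pdf(x, mu=0.0, sigma=1.0):
    """Density of a univariate Gaussian with mean mu and standard deviation sigma > 0."""
    exponent = -((x - mu) ** 2) / (2.0 * sigma ** 2)  # never positive: downward parabola
    norm = 1.0 / (math.sqrt(2.0 * math.pi) * sigma)   # normalization factor
    return norm * math.exp(exponent)                  # exp keeps the density positive

def integrate(f, a, b, steps=20000):
    """Midpoint-rule approximation of the integral of f over [a, b]."""
    h = (b - a) / steps
    return h * sum(f(a + (i + 0.5) * h) for i in range(steps))

mu, sigma = 1.0, 2.0
area = integrate(lambda x: gaussian_pdf(x, mu, sigma), mu - 10 * sigma, mu + 10 * sigma)
print(round(area, 6))  # 1.0
```

The density peaks at $x = \mu$, where the exponent is zero and the value equals the normalization factor itself.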

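A vector random variable $X = [X_1, \dots, X_D]^T$ is said to have a multivariate Gaussian distribution with mean $\mu \in \mathbb{R}^D$ and covariance matrix $\Sigma$ (symmetric and positive definite) if its probability density function is given by

$$p(x; \mu, \Sigma) = \frac{1}{(2\pi)^{D/2} \lvert\Sigma\rvert^{1/2}} \exp\left(-\frac{1}{2}(x - \mu)^T \Sigma^{-1} (x - \mu)\right)$$

Like in the univariate case, the argument of the exponential function, $-\frac{1}{2}(x - \mu)^T \Sigma^{-1} (x - \mu)$, is a downward-opening bowl. The coefficient in front, $\frac{1}{(2\pi)^{D/2} \lvert\Sigma\rvert^{1/2}}$, is a normalization factor used to ensure that

$$\frac{1}{(2\pi)^{D/2} \lvert\Sigma\rvert^{1/2}} \int_{\mathbb{R}^D} \exp\left(-\frac{1}{2}(x - \mu)^T \Sigma^{-1} (x - \mu)\right) dx = 1$$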
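The multivariate density can be evaluated and sanity-checked in the same way. The NumPy sketch below is one possible way to write it, not a definitive implementation: the function name `mvn_pdf`, the example mean and covariance, and the grid used for the numerical integration are assumptions chosen for illustration.

```python
import numpy as np

def mvn_pdf(x, mu, Sigma):
    """Density of a multivariate Gaussian at x; Sigma must be symmetric positive definite."""
    D = len(mu)
    diff = x - mu
    # Quadratic form (x - mu)^T Sigma^{-1} (x - mu), computed without forming the inverse.
    quad = diff @ np.linalg.solve(Sigma, diff)
    norm = 1.0 / np.sqrt((2.0 * np.pi) ** D * np.linalg.det(Sigma))
    return norm * np.exp(-0.5 * quad)

# Illustrative 2-dimensional example.
mu = np.array([0.0, 1.0])
Sigma = np.array([[2.0, 0.6],
                  [0.6, 1.0]])

# Riemann sum over a grid wide enough to hold almost all of the probability mass.
step = 0.1
xs = np.arange(-10.0, 10.0, step)
ys = np.arange(-9.0, 11.0, step)
total = sum(mvn_pdf(np.array([a, b]), mu, Sigma) for a in xs for b in ys) * step ** 2
print(total)  # should be close to 1
```

With a single dimension, the determinant is just the variance and the quadratic form reduces to the squared deviation divided by the variance, so the formula coincides with the univariate density.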

Gaussian mixture models and EM

https://www.youtube.com/watch?v=qMTuMa86NzU